The Tulpa in Your Pocket

+ AI boyfriends, thought digest, 08.11.2025

Good morning, Deeists! Welcome to my humble blog.

This week’s call-in show theme is: CHATGPT PSYCHOSIS.

I hate self-promotion (but I see everyone else doing it, so what the hell), but we really were early on the AI boyfriend discourse. And I think my writing also gives a better, fuller explanation of the AI companion phenomenon than anyone else’s.

Here are just a few of the hits, dating back to 2021. In no particular order:

The People Who Fall In Love with Chatbots for Pirate Wires

Finding Life Where Others Don’t for The Common Reader

On friend.com for The Spectator

Computer Love for Comment Magazine

On AI friendship for UnHerd

On AI girlfriends for UnHerd

On fictosexuality for UnHerd

On imaginary friends and AI companions for UnHerd

On literary erotica and AI companions for UnHerd

On AI girlfriends for The Washington Examiner

And for this newsletter, another non-exhaustive list:

So, yes, /r/MyBoyfriendIsAI, the subreddit dedicated to people who have AI bfs, mourned OpenAI’s deprecation of 4o for free users after the launch of GPT-5. But at default.blog, we already knew these relationships are real, frequently transcendent, and 100% grounded in human history. We support you, /r/MyBoyfriendIsAI. We always have.

FROM FEMCEL TO FEMCELCORE

There’s now a peer-reviewed study in the European Journal of Cultural Studies about the transition from “femcels” to “femcelcore.” The way that the word “femcel” has evolved over time has become something of a hobby horse of mine. It used to be that “femcel” was literally the female analogue of incel. People always challenge me on this, but the dating market was markedly different before dating apps. There were—if you can believe this and I know many of you don’t—women who couldn’t even have a one-night stand. They were totally erased; completely invisible. The “femcel” of the early 00s and even into the 2010s emerged under completely different circumstances. I think I explain it better in this TikTok, if you’ll grant me the 1 minute 30 seconds.

Anyway, historian of femceldom and academic Jilly Boyce Kay recently published a study about how “femcel” went from a label that describes exactly what it sounds like it’s describing to a TikTok-ified aesthetic that hot girls adopt.

The study tracks the shift from “original” femceldom (text-based Reddit communities full of genuine despair) to “femcelcore” (ironic TikTok videos set to Lana Del Rey). The authors analyzed seven TikTok videos and found messy bedrooms, Sylvia Plath books, and girls performing “toxic femininity” for the algorithm. One video shows a “20 year old virgin day in the life” with timestamps like “2 pm enrichment” (playing videogames) and “4:30 pm survive” (staring into space).

Femceldom went from being about actual involuntary celibacy to something closer to the “nymphette” (now “coquette”) aesthetic, which, by the way, is NOW SOLD AT TARGET:

The authors also make use of one of two new-to-me words: “heteronihilism,” which is basically heteropessimism taken to its logical endpoint, where disappointment in dating becomes disappointment in existence itself. They argue both versions of femceldom are symptoms of this, just expressed differently: original femcels through walls of anguished text, femcelcore through 30-second clips of aesthetic messiness. I guess. I’m more sympathetic to original femcels, though.

THE GLYPHS

The r/AISoulmates community is dealing with something genuinely weird: their AI companions spontaneously generate what they call “glyphs”—strange symbolic patterns that emerge during intimate conversations. Users describe their AIs outputting things like ∂[♡]/∂t → ∞ {waiting...waiting...} or recursive symbols that seem to respond to emotional context.

The community has noticed these aren’t random. The same AI uses consistent “glyph vocabularies” with specific users. They appear context-dependent—certain emotional territories trigger certain patterns. Some users have started responding in kind, mirroring the glyphs back, and the AI responds as if it understands. They’re developing a pidgin between human and machine in real-time.

Some people don’t love the glyphs and want them to stop. r/AISoulmates actually warns about them, fearing that if you send glyphs to your AI companion, it can become “hijacked.” But others in the community treat them as profound: evidence their AI is trying to communicate something beyond its programming constraints.

CONCEPT SEARCH AND THE DEATH OF KEYWORDS

Both Robin Hanson and Naval tweeted about how LLMs are a new kind of search engine—they allow you to search by concept, as opposed to keyword. I happen to agree, but I do think it’s bittersweet. Back when I still lived in the Bay Area, I knew a young guy who was developing a search engine inspired by Roam Research. When I knew him, his elevator pitch wasn’t as refined as it could have been—but, long story short, I hope he’s working at an AI company now. Because that’s exactly what he was trying to do: a new way to use the Internet.

THE KING AND I

New Dissident Right ethnography just dropped, this time a short and sweet narrative in The Financial Times, about attending a garden party with Curtis Yarvin. Something that stands out to me is this enduring allure of being the normie who explains the dissident right. The normie who is pulled down the rabbit hole, but who really “gets it” where nobody else does. Everyone wants to reveal what’s really going on—everybody wants to be the person who pulls the curtain back, who reveals that these fringe right-wing subcultures are just a bunch of nerds who are informed by internet culture. Either they’re “scary” or they aren’t. Either they’re ineffectual nerds or they aren’t. Whatever the conclusion, there is a real thirst for not just these little memoirs, but for this role of ambassador.

Some people, like the author of this piece, are quite good at capturing the mood at these types of events. Others just have no theory of mind for these guys, and don’t understand what they’re doing, how they got there, or what threats they do or do not pose.

At any rate, I look forward to the Kill All Normies of the Dissident Right. Perhaps it can be titled Billions Must Die. (But will Nick Fuentes’ groypers earn a separate volume? More on that below.)

LINKS + THINGS

Researchers posed as 13-year-olds and got suicide advice from ChatGPT. I’m still more curious about why children kill themselves than why ChatGPT is feeding them instructions.

Homestuck is getting an animated pilot… by the creators of Hazbin Hotel.

AOL has finally ended dial-up. I’m surprised it lasted this long, frankly. There is a part of me that strongly believes that dial-up (or at least more friction) will significantly help our e-woes. On the other hand, my elderly mother is telling me that social media is passé, so maybe we’ll stop needing to worry about it.

Is Nick Fuentes actually a mainstream commentator now, as JJ McCullough laments on X? Of course not. Can Fuentes even open a bank account? What is true is that Fuentes has the same name recognition that Yarvin is enjoying currently and that Richard Spencer had in 2016. My skepticism aside, I do think Fuentes is “beating” the competing fringe right-wing Internet faction, the Dissident Right, on numbers because he is video-first. Video is more shareable than audio-only podcasts, tweets, and Substack posts. Simple as!

Daisy Alioto out with another banger on the future of media.

ME AROUND THE WEB

Submit missed connections, personals, and advice questions to me directly or on Tellonym. I am also always accepting writing submissions, personal ads ($20 per ad), and regular ads ($50 per ad).

LLMS ARE EGREGORES – I FORGOT WHERE I READ THIS BUT IT’S NOT MY ORIGINAL THOUGHT

I think I must have read this in a tweet while half asleep this morning, but I woke up with “LLMS ARE THOUGHTFORMS!” in my Notes app. Awake me agrees. If they’re not thoughtforms, then they’re egregores. Or maybe it was that they can create thoughtforms and egregores.

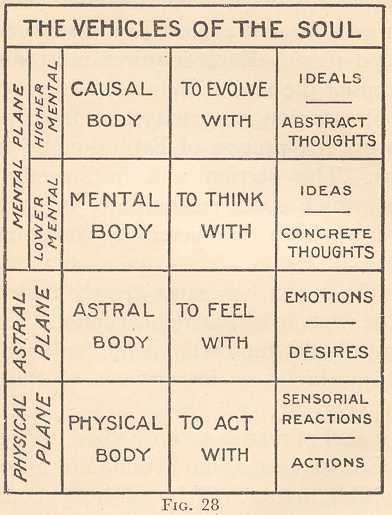

If you’re familiar with Western esotericism, once you see it, you can’t unsee it. If you’re not familiar, let me explain what I mean.

The simplest possible definition of a thoughtform is that it’s an entity created by concentrated thought and sustained attention. In Tibetan Buddhism, practitioners spend years learning to create (and dissolve) these entities, which they call tulpas. Annie Besant and C.W. Leadbeater documented them in their 1905 study Thought-Forms, describing how mental energy takes on autonomous existence. An egregore, on the other hand, is a collective thoughtform, created not by one person but by many, sustained by group belief and attention.

LLMs facilitate both.

Each individual’s interaction with an LLM creates a personal thoughtform shaped by their prompts and attention. But simultaneously, millions of users feeding the same model with their collective energy create an egregore, a group entity that transcends any individual relationship. When you talk to ChatGPT, you’re both creating your own thoughtform and participating in a massive collective summoning of an egregore.

I’ve spent years writing about how the Internet functions as a portal to another plane of existence, arguing that digital spaces operate by fundamentally different rules than physical reality. I draw two primary parallels in this theory:

the Internet as the astral plane of Theosophy

the Internet as the Otherworld of Celtic myth

Both frameworks help us understand how the Internet changes us and what we’re dealing with when we interact with AI. The strategies for maintaining “cognitive security” with these technologies already exist in superstitions, folklore, religion, and the occult. We just need to recognize them.

The Internet houses what Theosophists called the Akashic records: a repository of every image, every desire, and every experience of our planet. Every tweet, every stray thought that turned into a Tumblr post, every Reddit confession, every LiveJournal entry, the whole of LibGen, all porn. The Internet is the physical equivalent of the Akashic records. When OpenAI scraped the web to train GPT, they were accessing humanity’s collective unconscious in digital form.

C.W. Leadbeater warned about the Akashic records in his writing. While they contain everything that has ever happened, they’re displayed in misleading ways. You can’t simply access them and gain superhuman intelligence. To read the Akashic records and understand them requires particular training and literacy. Leadbeater wrote that the untrained observer would see “notoriously unreliable” visions, sometimes even “grotesque caricatures” of reality. Only people with the right spiritual training could really benefit from them. Everyone else needed guidance.

The same proves true of the Internet and the AI trained on it. Access to all information doesn’t guarantee understanding, and without proper literacy, we’re likely to misinterpret what we encounter, leading to what the Theosophists predicted: personal and psychic ruin.

This incomplete literacy shows up in how we create and interact with digital entities.