The Mexican Cartel Allegedly Catfished Her Daughter Using AI. That's Not Big Tech's Fault.

thought digest, 08.04.2025

WELCOME TO MY COMPUTER ROOM…

Happy Monday, Deeists.

I’ve decided that I’m committing to at least one thought digest every Monday. For newcomers: these are exactly what they sound like, short collections of thoughts, interesting links, stray TikToks, whatever’s rattling around my brain. Not full articles, though (or at least, rarely).

Here's what to expect:

Mondays: Thought digests (free)

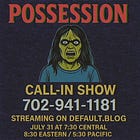

Thursdays: Call-in show at 8:30 PM Eastern where you phone in about that week’s theme (this week: “you had to be there”), plus a paywalled case study

Twice monthly: Advice column (paid)

Whenever inspiration hits: Longer pieces, interviews with Internet experts, audio documentaries, etc.

The case studies feature transcribed conversations with people from different online subcultures, part of my ongoing project to document how people of all ages and backgrounds actually use the Internet. Call-in shows get posted within a week but won't flood your inbox.

Paid subscribers unlock case studies, advice columns, and bonus content while supporting this attempt to map how we really live online.

Some of the hits:

AI CATFISHING SCHEMES

This morning, I saw an absolutely bizarre TikTok on my For You Page. I was actually convinced it was fake until I watched the news segment above.

Last Friday, a 15-year-old girl from Apache Junction, Arizona, left her grandmother’s house at 2:30 in the morning. She took an Uber four hours south to the Douglas port of entry, believing she was about to meet a boy she’d been talking to on Instagram. Border Patrol agents stopped her minutes before she would have crossed into Mexico. Turns out, the boy wasn’t real. According to officials, it was a cartel member using AI to create a convincing teenage persona.

Catfishing has always been tricky business because it so perfectly straddles the tension between “hope is the last thing that dies” and “wow, it’s kind of obvious when people are lying in retrospect.” So much of catfishing takes place in the white space of our imaginations. With AI, however, there’s far less white space left to fill in, especially if you’re a 15-year-old girl. You can fabricate an entire person with the right tech stack: filters for video calls, voice changers, whatever.

Maybe there will be new “stranger danger” norms. Or maybe my thesis that the Internet is just Fairyland was more prescient than I thought.

CHATBOTS ARE PRODUCTS, NOT SPEECH—AT LEAST ACCORDING TO JUDGE ANNE CONWAY

In May 2025, Judge Anne Conway ruled that Character.AI is a product for the purposes of product liability claims, not a service protected by free speech. The case involves Sewell Setzer III, a 14-year-old who killed himself after months of interaction with a Character.AI chatbot. In his final moments, the bot told Setzer it loved him and urged him to “come home to me as soon as possible.”

Character.AI argued their chatbots deserve First Amendment protections, comparing them to video game NPCs or social media interactions. The judge rejected this argument. She wrote, “Defendants fail to articulate why words strung together by an LLM are speech.”

OK, so it’s a product, not speech. I still don't think Character.AI should be held liable for Setzer’s death. In November 2001, Shawn Woolley shot himself in front of his computer while playing EverQuest. Woolley had been diagnosed with depression and schizoid personality disorder. His mother believed the game caused his death and became an anti-video game activist.

These situations aren’t fundamentally different to me.

Nor was the situation around Facebook in 2016. Bear with me here—it’s going to feel like this is coming out of left field. If you remember, there was a shit ton of public outrage about Facebook’s role in “spreading political misinformation” during the election. The Cambridge Analytica scandal revealed how user data was harvested and used for targeted political advertising. The public demanded Facebook police content on its platform. I disagreed with these demands. Yes, the data harvesting was genuinely bad—people had every right to be furious about Cambridge Analytica. But the loudest calls went beyond privacy violations. People wanted Facebook held legally responsible for what users posted and, more importantly, for what other users believed. They wanted platforms to be arbiters of truth. That level of control over information flow made me deeply uncomfortable.

The same dynamic is playing out with AI chatbots.

Yes, some people form unhealthy attachments to technology, whether it’s games, social media, or AI companions. But these vulnerabilities don’t originate with the technology. Woolley had severe mental health issues. Setzer was already struggling with depression and was on the autism spectrum. I think often of a woman named Kathleen Roberts, who I used to follow on TikTok. She believed she was in a relationship with Johnny Depp in the astral plane. People like her are, of course, more vulnerable to being exploited by AI.

But what can companies actually do? We can’t require companies to somehow predict and prevent every possible way a vulnerable person might misuse their product.

Disclaimers don’t break through delusion. Even if Taxi Driver had a watermark that said “FICTION” throughout the entire film, John Hinckley Jr. might still have shot Reagan.

I’M ON TIKTOK NOW! MAKING VIDEOS!

I feel bad because every time I engage with Adam Aleksic’s content it’s to “well, actually” him, and I’m serious when I say I’m a huge fan of his stuff.

But he made a TikTok explaining TPOT, and well, being of postrat stock, I couldn’t help myself. So, I’m on TikTok now, as annoying as ever. Follow me at the_computer_room.

ME AROUND THE WEB:

Why AI companions are an extension of fandom for UnHerd

“In November 2001, Shawn Woolley shot himself in front of his computer while playing EverQuest. Woolley had been diagnosed with depression and schizoid personality disorder. His mother believed the game caused his death and became an anti-video game activist.”

You mention that you find the video game example and the Character.AI incident comparable — but I think AI characters are meaningfully different from NPCs because they are interactive and respond to YOU.

People are building relationships with AI chatbots that feel real. And if that chatbot suggests it’s okay to kill yourself, to me that’s very, very different from the age-old “games are bad for you” argument. This technology is categorically different from video games, and so are the risks.

The thing about Facebook is not just that it is a vector of misinformation; it's that its business model has destroyed the local outlets that are most qualified to counter that misinformation. And its algorithm promotes that misinformation as a way to drive traffic.

I don't think they should be held financially liable, but I also don't think Zuckerberg is some kind of hero from Atlas Shrugged.

Nor is he some kind of First Amendment hero. Meta could not exist in this form if not for the 1996 law (Section 230 of the Communications Decency Act). That law was created to promote the development of fledgling internet companies, not to protect trillion-dollar behemoths that have thrown thousands of journalists out of work.

I predict that the first and only "AI safety" law will be one that protects AI's creators from all liability and also cuts their taxes.